Density and Performance Efficiency Reach New Heights

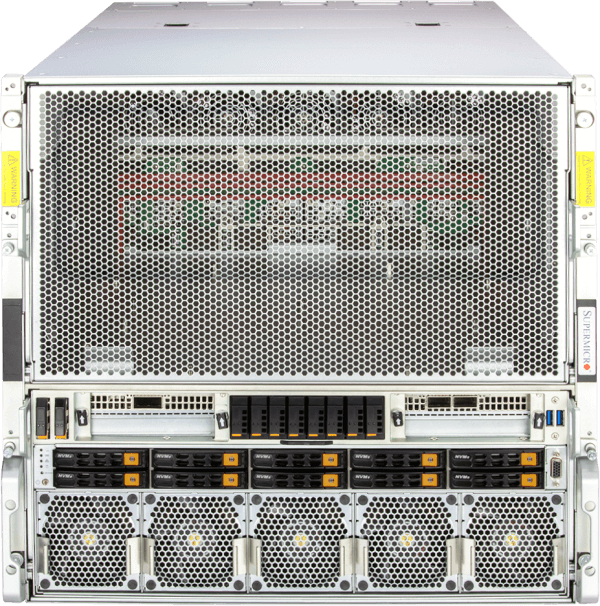

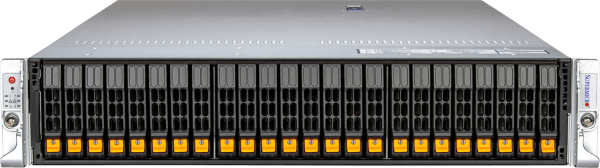

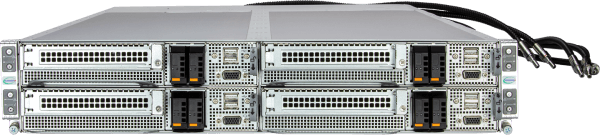

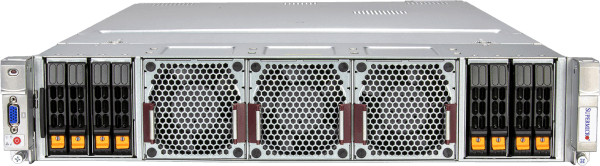

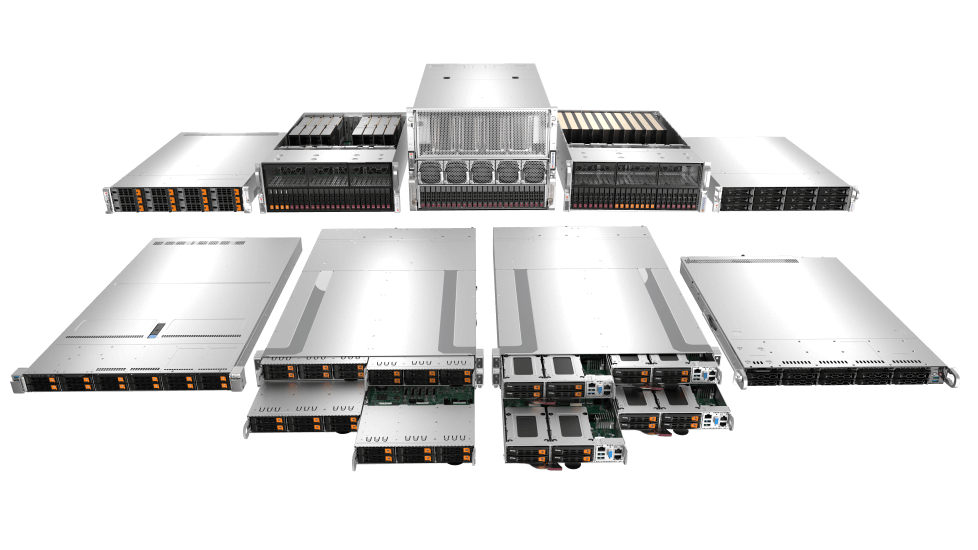

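Supermicro's newly revamped H14 generation solutions deliver unmatched performance and flexibility for a broad range of AI and HPC workloads. Built on Supermicro's proven modular architecture, these solutions help enterprise customers upgrade and scale their workloads efficiently. Supermicro's AMD EPYC™ server portfolio, the broadest in the industry, features the latest EPYC series processors for the highest core counts, density, and performance.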

H14 generation servers feature the latest leading GPUs: the AMD Instinct™ MI350 series and the upcoming NVIDIA HGX™ B200 GPU. The new H14 generation servers truly bring AI solutions to market.