大規模AI訓練與推論

大型語言模型、生成式AI訓練、自動駕駛、機器人

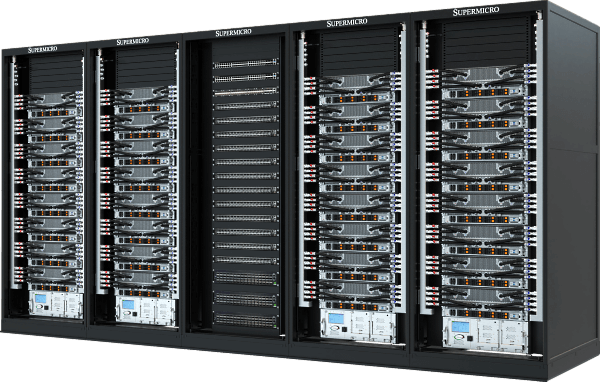

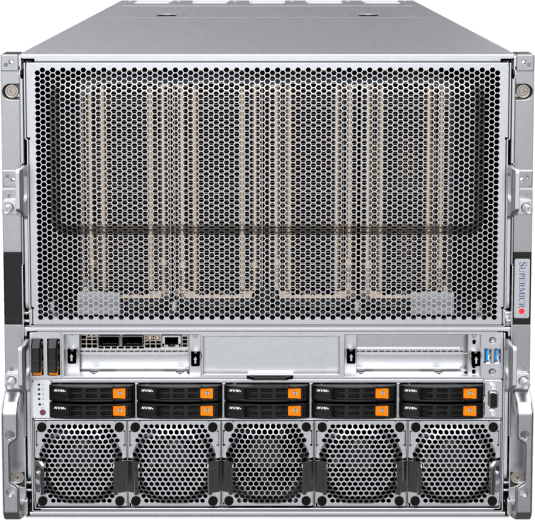

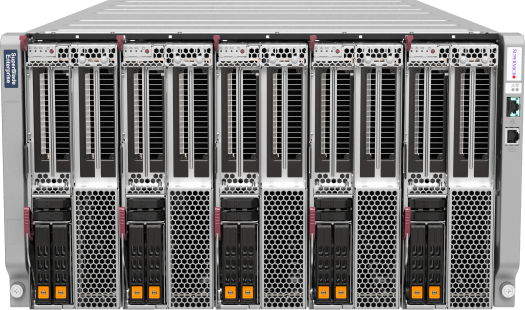

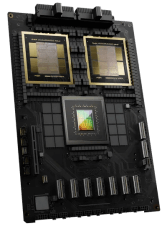

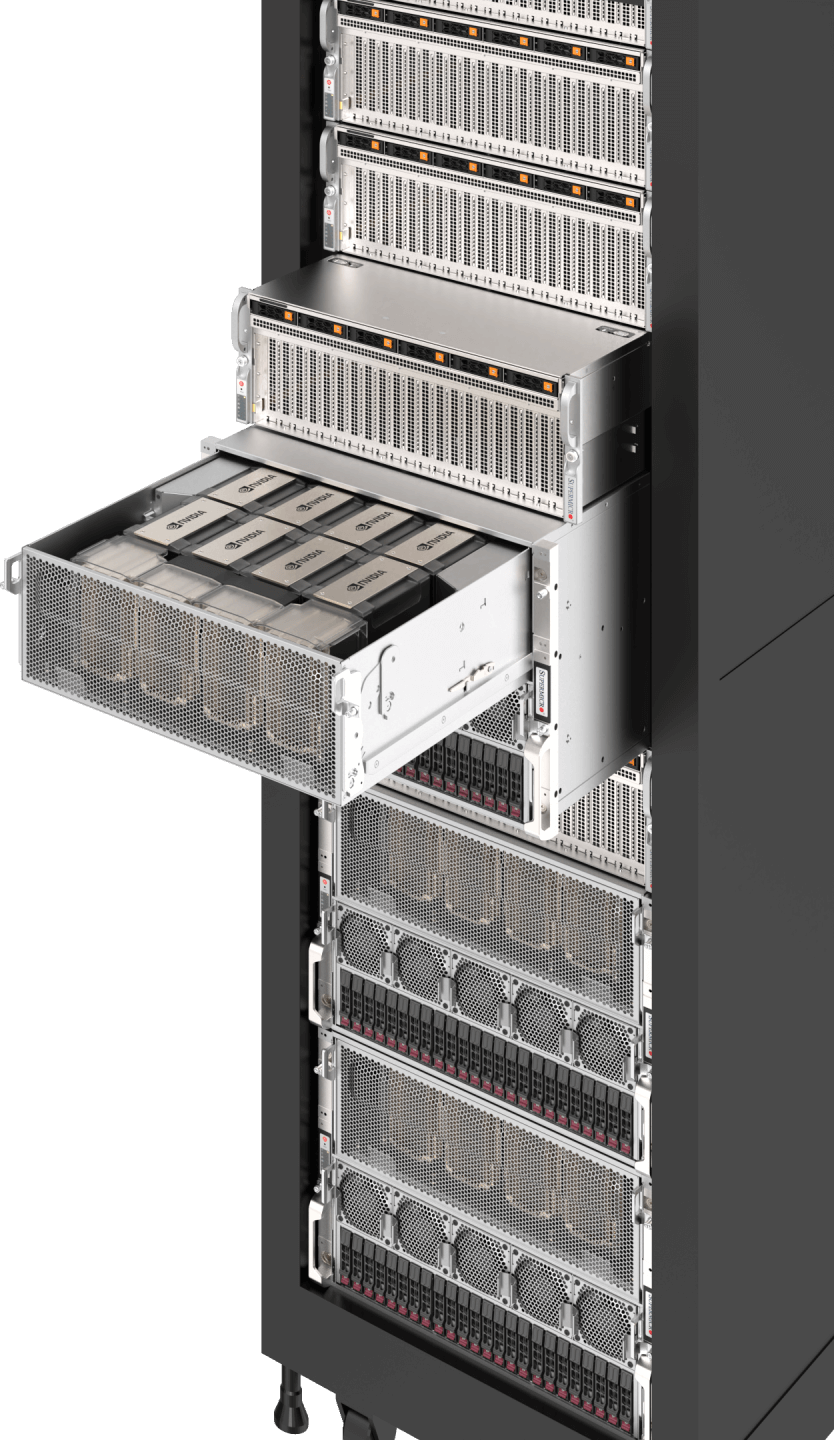

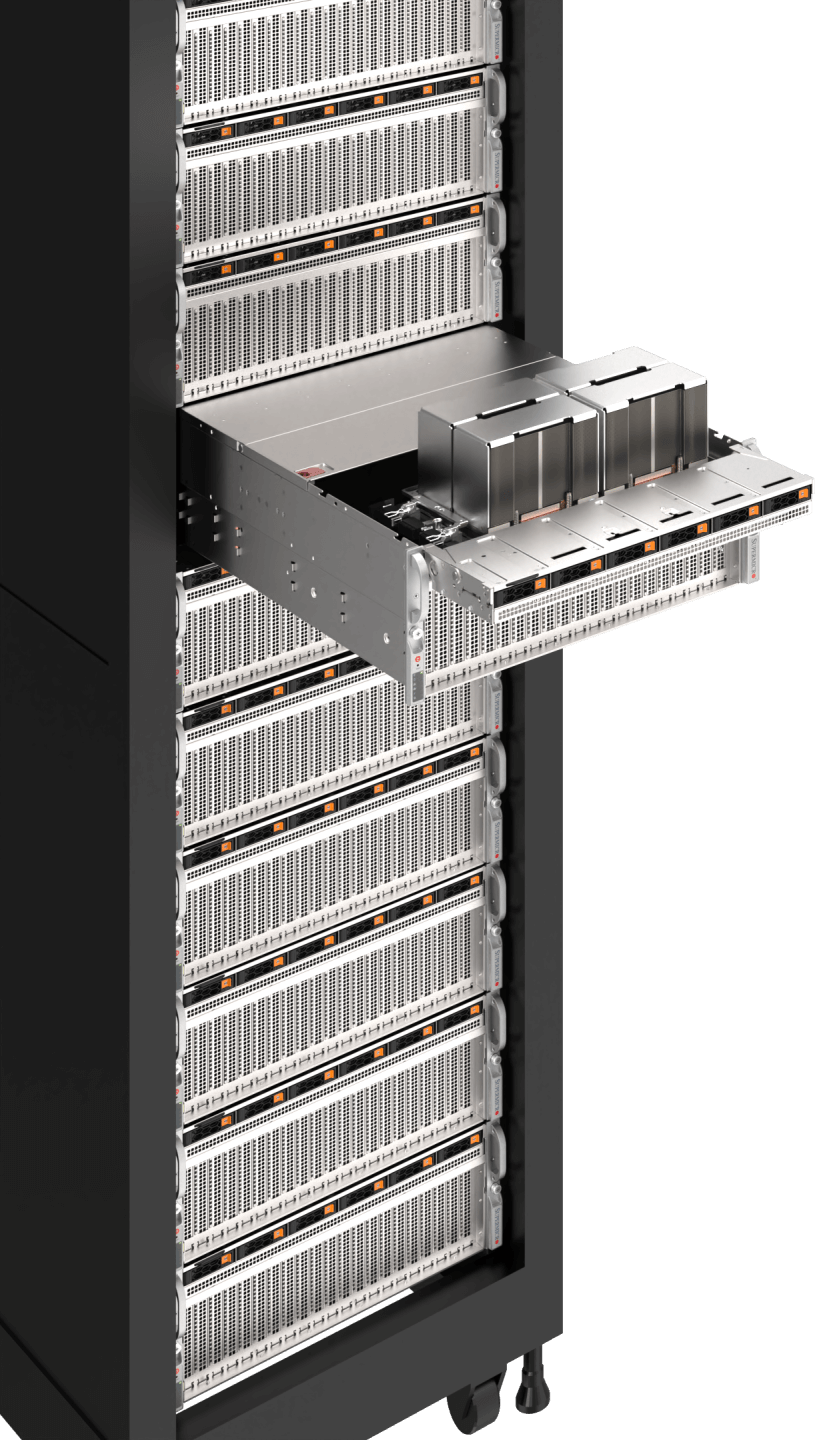

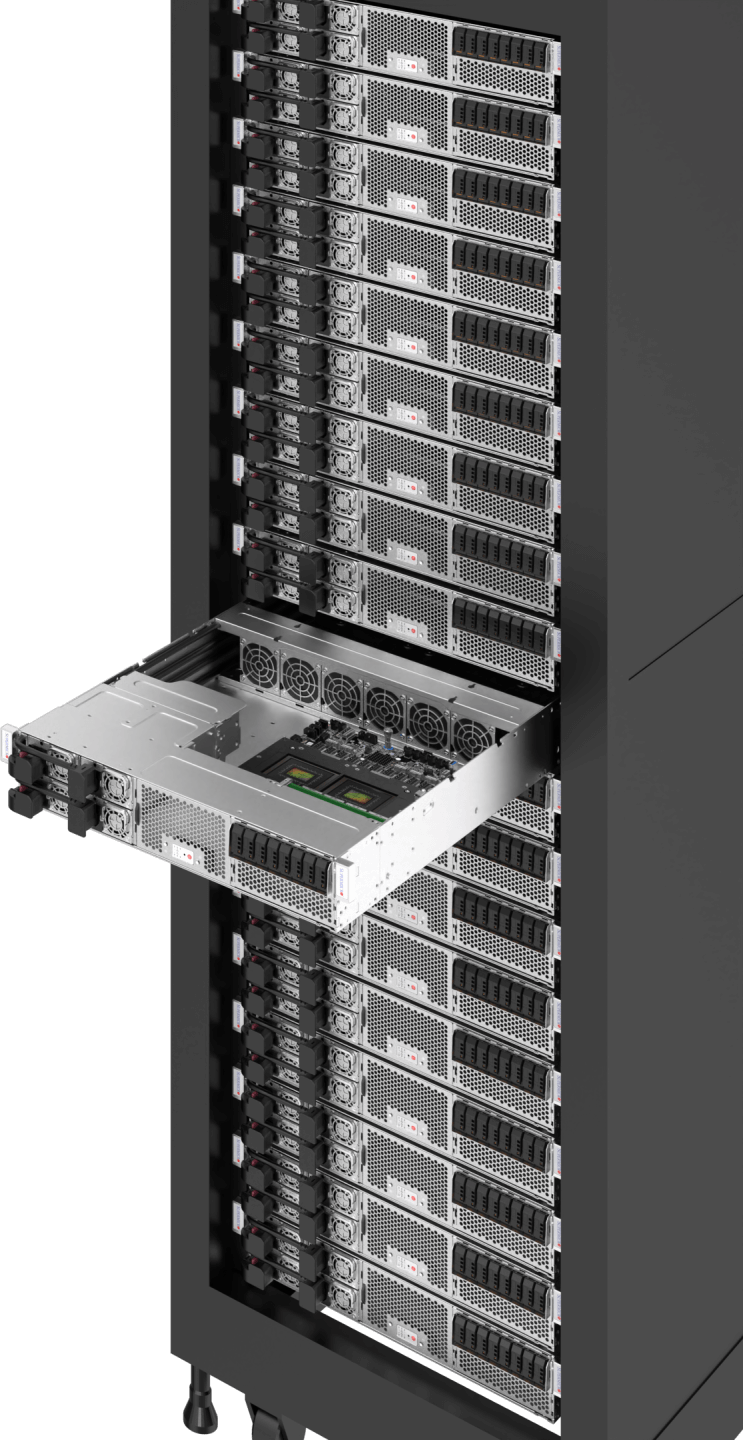

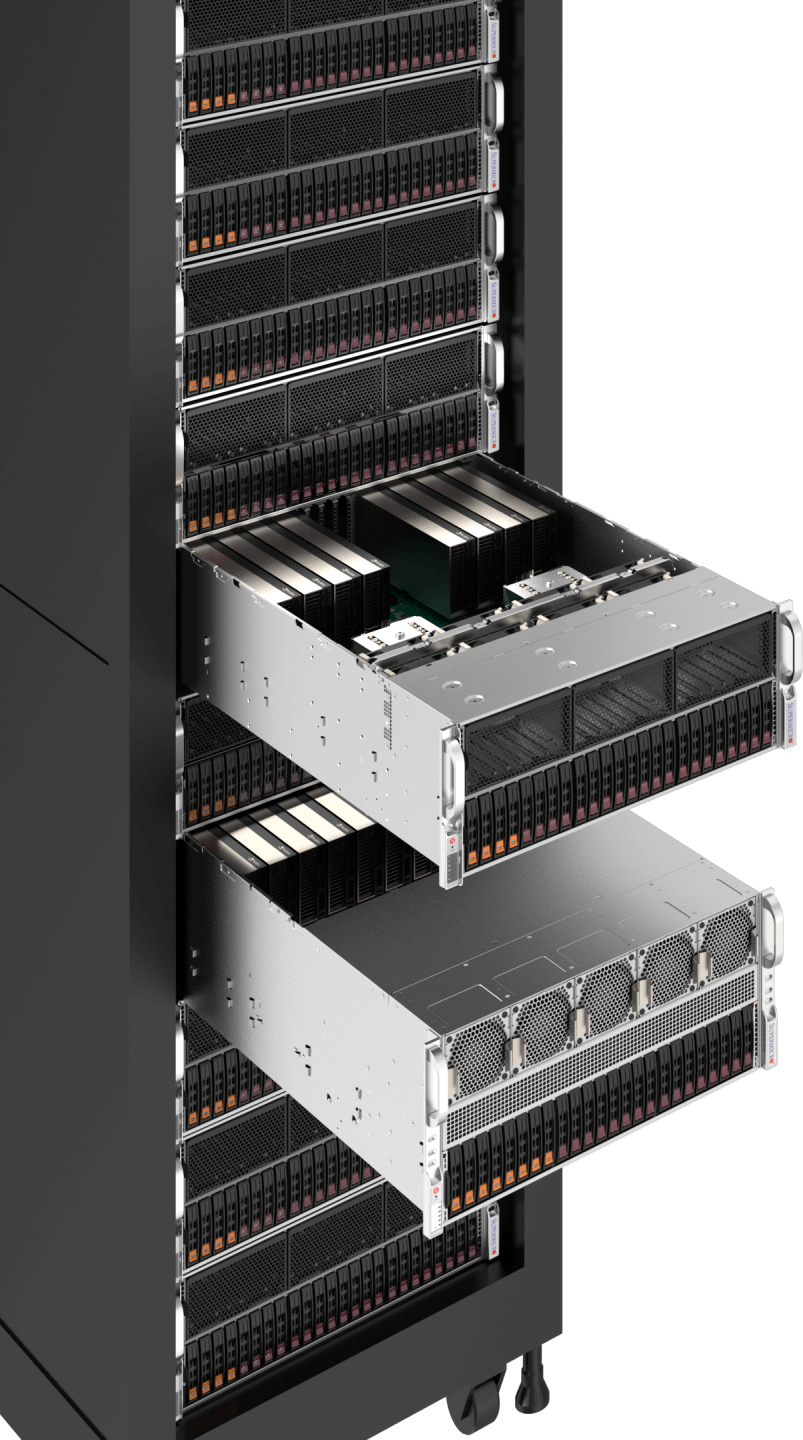

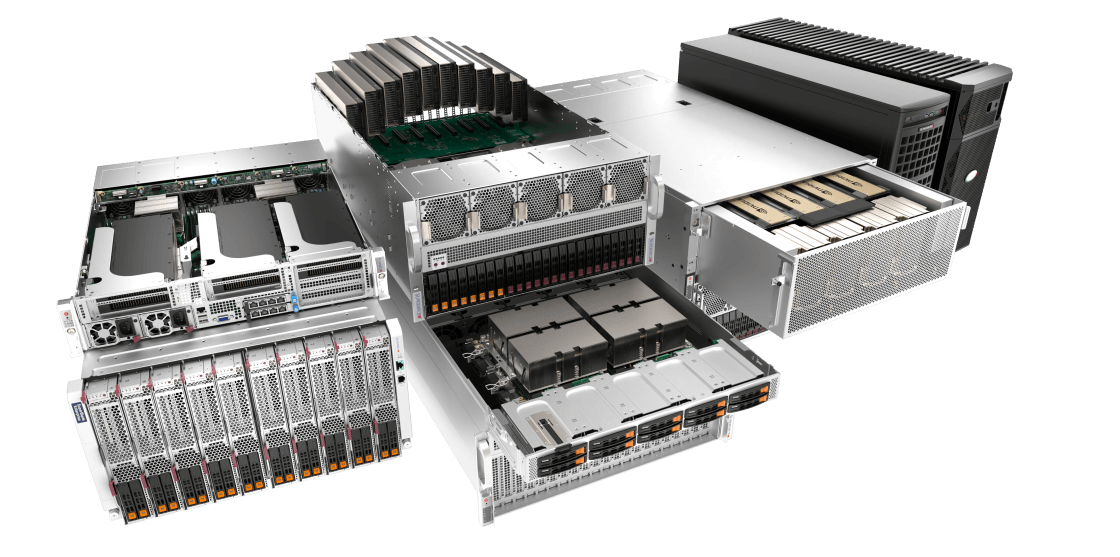

大規模的AI訓練需要最先進的技術,最大化GPU的平行運算能力,以處理數十億甚至數兆的AI模型參數,並使用大量的資料集進行訓練。Supermicro系統搭載了NVIDIA HGX™B200與GB200 NVL72,以及最快的NVLink®與NVSwitch® GPU-GPU互連技術(頻寬最高可達1.8TB/秒),並與每個GPU進行一對一相連,進而將節點叢集化。這些最佳化系統能夠從頭開始訓練大型語言模型,並將模型同時提供給數百萬名使用者。我們透過全快閃NVM來完成堆疊,實現快速的AI資料管道,進一步提供經完全整合的機架與液體冷卻技術,以確保快速部署與順暢無阻的AI訓練體驗。

工作負載規模

- 特大型

- 大型

- 中型

- 儲存

資源

HPC/AI

工程模擬、科學研究、基因組測序、藥物研究與發展

可助力加速科學家、研究人員和工程師的研發時間,同時,越來越多的HPC工作負載正在強化機器學習演算法,以及透過GPU加速的平行運算,以更快獲得成果。目前,全球許多最快的超級運算叢集都運用到GPU和AI技術。

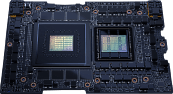

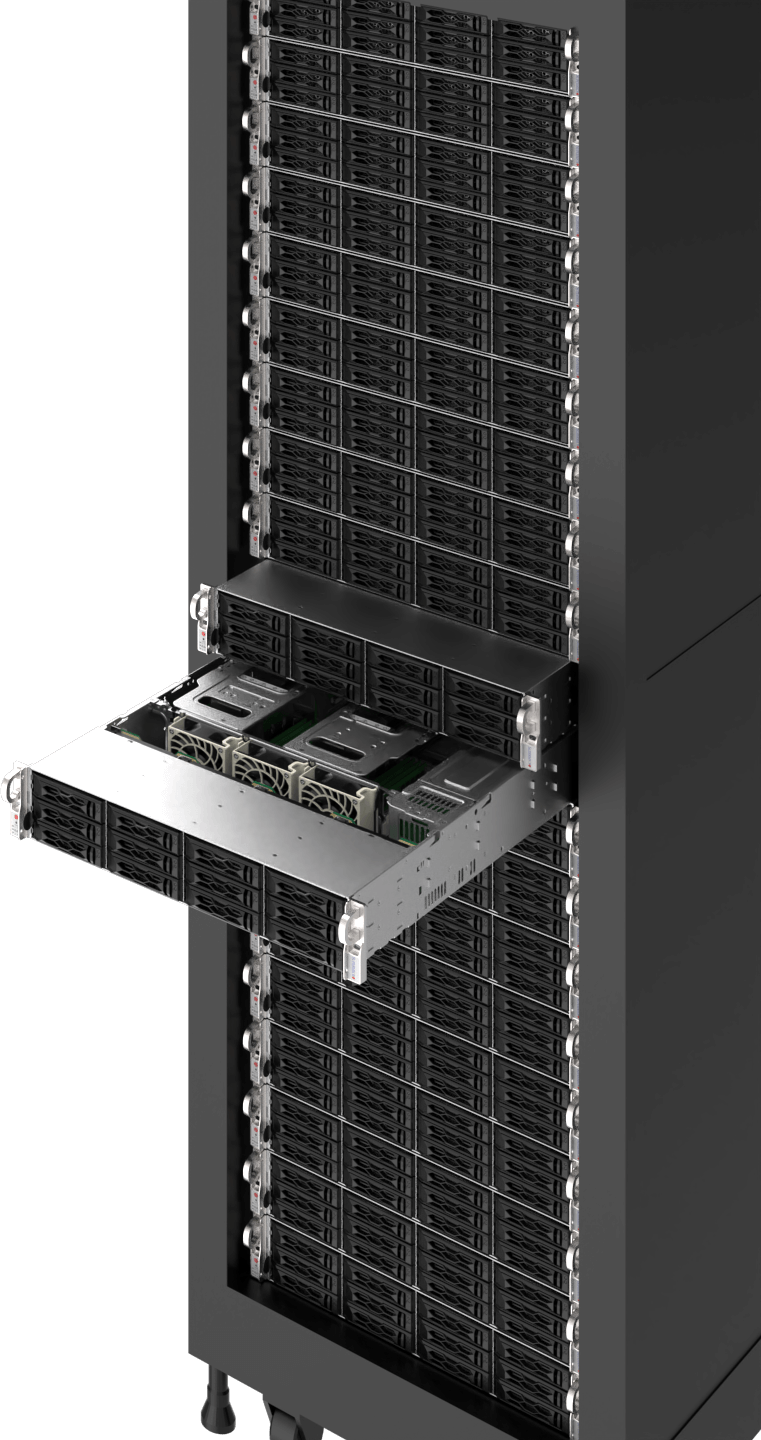

HPC工作負載通常需要進行資料密集型模擬與分析,並需要處理大量的資料集,以及提供高度精準性。GPU(如NVIDIA H100/H200)可提供前的雙精確度效能,且每GPU效能可達60 teraflops。Supermicro的高彈性HPC平台能支援多組GPU與CPU,確保在多種密集型機體規格內運行,並可進行機架規模整合與搭配液體冷卻技術。

工作負載規模

- 大型

- 中型

資源

企業型AI的推論與訓練

生成式AI推論、AI服務/應用程式、聊天機器人、推薦系統、商業自動化

生成式AI技術已成為科技、銀行、媒體等各類產業的全新趨勢。因AI技術是孕育創新、大幅提升生產力、簡化營運、以資料為導向的決策,以及改善客戶體驗的源頭,AI應用賽道已開啟序章。

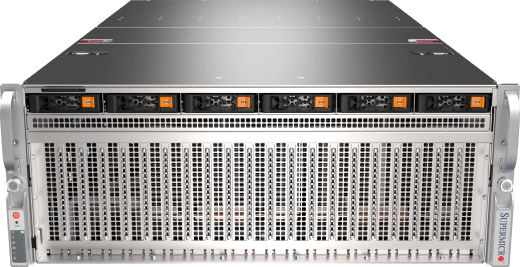

無論是AI應用程式與商業模式、用於客戶服務的智慧化擬真聊天機器人,或是AI協同的程式碼生成與內容創作,企業都可以運用開放式架構、程式庫、預先訓練的AI模型相關技術,並透過自有資料集,依據特殊專案需求,對這些架構、程式庫和模型進行微調。許多企業已開始打造AI基礎設施,而Supermicro多元的GPU最佳化系統可為這些企業提供開放式模組化架構、供應商靈活性,以及順暢的部署與設施升級途徑,進而導入更先進的技術。

工作負載規模

- 特大型

- 大型

- 中型

資源

視覺化與設計

即時協作、3D設計、遊戲開發

現今的GPU為3D圖形與AI應用程式提升了逼真度,進而加速工業的數位化。高真實度的3D模擬技術推動了產品開發與設計流程、製造,以及內容創作的轉型,進一步實現更好的品質、無機會成本的永續迭代,以及更快的上市時間。

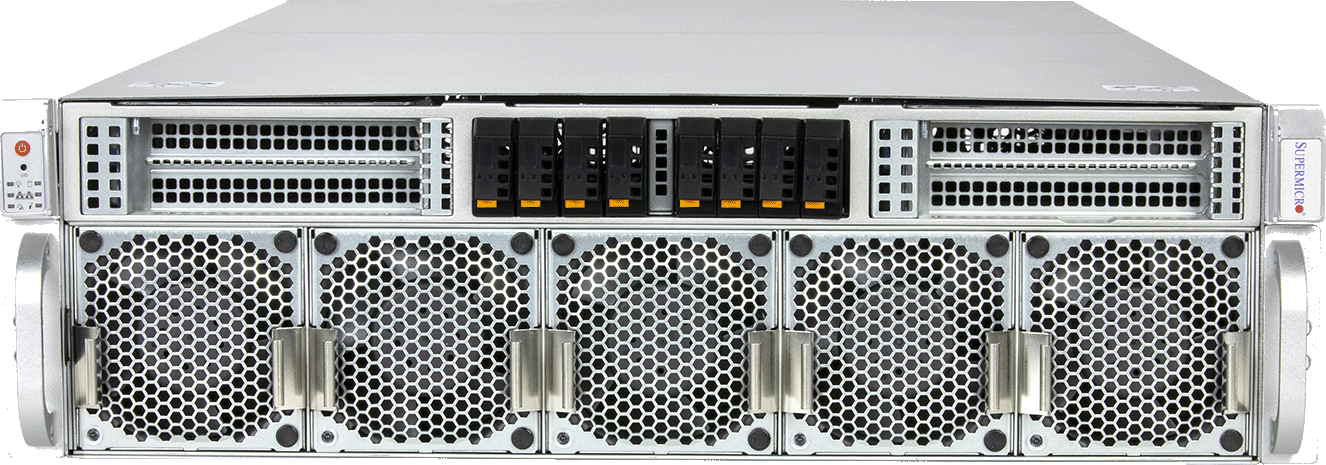

Supermicro全面整合解決方案,大規模建構虛擬製作基礎架構以加速產業數位化進程。解決方案包含:4U/5U 8-10 GPU系統、NVIDIAOVX™參考架構(針對NVIDIA Omniverse Enterprise進行優化並配備通用場景描述(USD)連接器),以及NVIDIA認證的機架式伺服器與多GPU工作站。

工作負載規模

- 大型

- 中型

資源

內容傳遞與虛擬化

內容傳遞網路(CDN)、轉碼、壓縮、雲端遊戲/串流

影片傳遞工作負載在目前的網路流量占比量仍然相當高。隨著串流服務供應商提供更多的4K和甚至8K的內容,或是螢幕更新率更高的雲端遊戲,結合媒體引擎的GPU加速變成了必須要素,為串流管線提供數倍的吞吐量效能,同時藉由AV1編碼和解碼等最新技術,維持更佳的視覺擬真度,並減少所需的資料量。

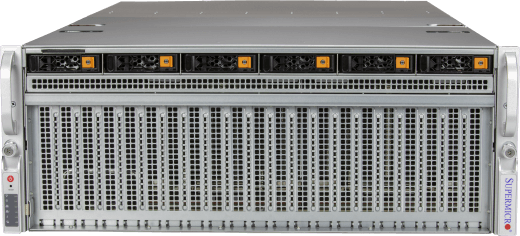

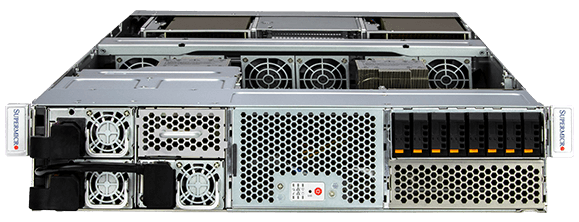

Supermicro的多節點與多GPU系統,例如2U 4節點BigTwin®系統,可滿足現代影片傳遞的嚴格需求。這些系統的每個節點都支援NVIDIA L4 GPU,並具備大量的PCIe Gen5儲存與網路速度,針對內容傳遞網路,因應需求嚴苛的資料管道。

工作負載規模

- 大型

- 中型

- 小

資源

邊緣AI

邊緣影片轉碼、邊緣推論、邊緣訓練

在不同產業內,不少企業的員工與客戶在城市、工廠、零售店、醫院等邊緣地點接觸與互動,其中,越來越多的企業開始投資在邊緣AI的部署 。透過在邊緣端處理資料和運行AI和ML演算法,企業能克服頻寬和延遲方面的限制,實現實時分析,進而及時做出決策、預測型看護、個人化服務,以及優化業務運作。

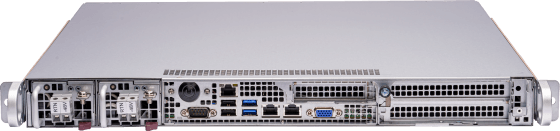

專為環境優化設計AI 具備多種緊湊機型,可提供低延遲、開放架構所需的效能。其預先整合的元件、多元的硬體與軟體堆疊相容性,以及開箱即用的隱私與安全功能集,皆能滿足複雜邊緣部署的需求。

工作負載規模

- 特大型

- 大型

- 中型

- 小

資源

COMPUTEX 2024 執行長主題演講