Accelerated Building Blocks with Intel GPUs

For Cloud Scale AI Training and Inference

Demand for high-performance AI/Deep Learning (DL) training compute has doubled in size every 3.5 months since 2013 (according to OpenAI) and is accelerating with the growing size of data sets and the number of applications and services based on large language models (LLMs), computer vision, recommendation systems, and more.

With the increased demand for greater training and inference performance, throughput, and capacity, the industry needs purpose-built systems that offer increased efficiency, lower cost, ease of implementation, flexibility to enable customization, and scaling of AI systems. AI has become an essential technology for diverse areas such as copilots, virtual assistants, manufacturing automation, autonomous vehicle operations, and medical imaging, to name a few. Supermicro has partnered with Intel to provide cloud scale system and rack design with Intel Gaudi AI Accelerators.

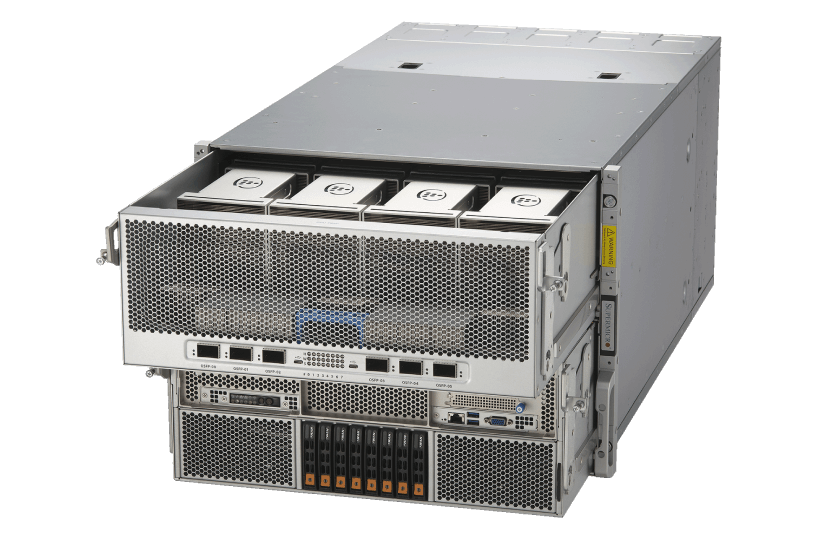

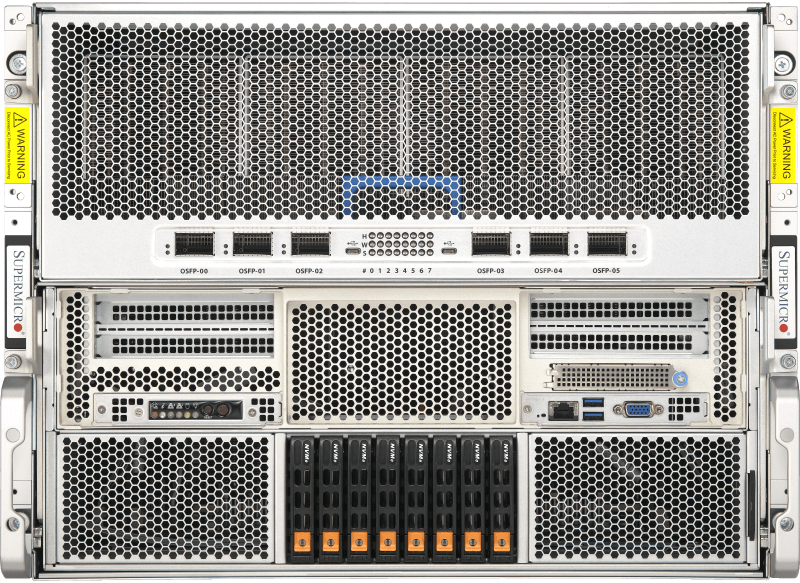

New Supermicro X14 Gaudi® 3 AI Training and Inference Platform

Bringing choice to the enterprise AI market, the new Supermicro X14 AI training platform is built on the third generation Intel® Gaudi 3 accelerators, designed to further increase the efficiency of large-scale AI model training and AI inferencing. Available in both air-cooled and liquid-cooled configurations, Supermicro's X14 Gaudi 3 solution easily scales to meet a wide range of AI workload requirements.

- GPU: 8 Gaudi 3 HL-325L (air-cooled) or HL-335 (liquid-cooled) accelerators on OAM 2.0 baseboard

- CPU: Dual Intel® Xeon® 6 processors

- Memory: 24 DIMMs - up to 6TB memory in 1DPC

- Drives: Up to 8 hot-swap PCIe 5.0 NVMe

- Power Supplies: 8 3000W high efficiency fully redundant (4+4) Titanium Level

- Networking: 6 on-board OSFP 800GbE ports for scale-out

- Expansion Slots: 2 PCIe 5.0 x16 (FHHL) + 2 PCIe 5.0 x8 (FHHL)

- Workloads: AI Training and Inference

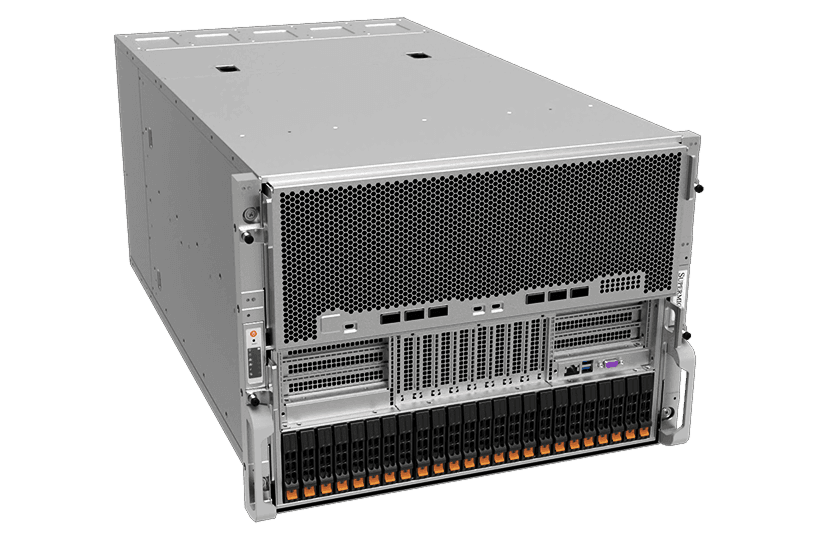

Supermicro Gaudi®2 AI Training Server

Building on the success of the original Supermicro Gaudi AI training system, the Gaudi 2 AI server prioritizes two key considerations: integrating AI accelerators with built-in high-speed networking modules to drive operation efficiency for training state-of-the-art AI models and bringing the AI industry the choice it needs.

- GPU: 8 Gaudi2 HL-225H mezzanine cards

- CPU: Dual 3rd Gen Intel® Xeon® Scalable processors

- Memory: 32 DIMMs - up to 8TB registered ECC DDR4-3200MHz SDRAM

- Drives: up to 24 hot-swap drives (SATA/NVMe/SAS)

- Power: 6x 3000W High efficiency (54V+12V) fully-redundant power supplies

- Networking: 24x 100GbE (48 x 56Gb) PAM4 SerDes Links by 6 QSFP-DDs

- Expansion Slots: 2x PCIe 4.0 switches

- Workloads: AI Training and Inference

Supermicro Server With the Intel® Gaudi® 3 Is Optimized for Real-World AI Scenarios

New Supermicro Servers with Intel® Xeon® 6 series processors with P-Cores and Intel Gaudi 3 Accelerators Show Performance Gains Over Previous Generations

Supermicro X14 Intel® Gaudi® AI Accelerator Cluster Reference Design

Accelerating and Driving Down the Cost of AI Solutions with Supermicro's X14 Intel® Gaudi® 3 Accelerator Based System by Building on Open-Source Software and Industry-Standard Ethernet

Supermicro with GAUDI 3 AI Delivers Scalable Performance for AI Requirements

Range of Optimized Solutions for Data Centers of Any Size and Workloads For New Services and Increased Customer Satisfaction

Supermicro and Intel GAUDI 3 Systems Advance Enterprise AI Infrastructure

High_Bandwidth AI System Using Intel Xeon 6 Processors for Efficient LLM and GenAI Training and Inference Across Enterprise Scales

Supermicro X13 Hyper Empowers Enterprise AI Workloads on the VMWARE Platform

Computational AI workload Use Cases: Large Language Model (LLM) and AI Image Recognition - ResNet50 on Intel® Data Center Flex 170 GPU

Accelerating AI Compute With Supermicro Servers In The INTEL® Developer Cloud

Supermicro Advanced AI Servers featuring Intel® Xeon® Processors and Intel® Gaudi® 2 AI Accelerators Bring High-Performance, High-efficiency AI Cloud Compute, Training, and Inferencing to Developers and Enterprises

Superior Media Processing and Delivery Solution Based On Supermicro Servers W/ Intel® Data Center GPU Flex Series

Supermicro Systems with Intel® Data Center GPU Flex Series

Supermicro TECHTalk: New Media Processing Solutions Based on Intel Data Center GPU Flex Series

Watch as our product experts discuss the new Supermicro solutions based on the just announced Intel Data Center GPU Flex Series. Learn how these solutions can help benefit you and your company.

Delivering Scalable Cloud-Gaming

Supermicro Systems with Intel® Data Center GPU Flex Series

Supermicro offers all the system components for cloud service providers to build green, cost-effective, and profitable cloud gaming infrastructure.

Innovative Solutions for Cloud Gaming, Media, Transcoding, & AI Inferencing

Sep 08 2022, 10:00am PDT

Supermicro and Intel product and solution experts will discuss, in an informal session, the benefits of the solutions in the areas of Cloud Gaming, Media Delivery, Transcoding, and AI Inferencing using the recently announced Intel Flex Series GPUs. The webinar will explain the advantages of the Supermicro solutions, the ideal servers and the benefits of using the Intel Flex Series GPUs.

Supermicro and Habana® High-Performance, High-Efficiency AI Training System

Enabling up to 40% better price/performance for Deep Learning training than traditional AI solutions