Accelerating Your Discoveries

At Supermicro, we take pride in building HPC solutions from the ground up. From custom design to implementation, our dedicated teams offer plug-and-play services that optimize every aspect of each solution to meet even the most demanding HPC challenges while simplifying the deployment of supercomputers.

High-Performance Computing (HPC) is a computational domain that aims to solve complex problems through parallel processing. Today's HPC systems consist of hundreds to thousands of CPUs connected through a high-speed network. A large cluster of servers can be assigned to work on a single large problem or on several smaller problems simultaneously. Many HPC applications are written to take advantage of tens, hundreds, or thousands of cores at once, which can reduce time to solution by orders of magnitude. Supermicro offers a range of high-performance computing solutions to fit every need, each expertly designed, built, and tested by Supermicro professionals. Liquid cooling is available at rack scale for environments that require it.
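As a rough illustration of why core count matters for time to solution, the sketch below applies Amdahl's law, a standard textbook model that is not taken from Supermicro's materials, to estimate the ideal speedup of a fixed-size job. The function name `amdahl_speedup` and the 99% parallel fraction are illustrative assumptions, not measurements from any Supermicro system.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup of a fixed-size job under Amdahl's law.

    parallel_fraction: share of the work that can run in parallel (0 to 1).
    cores: number of cores working on the job (hypothetical input).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part runs at full cost; the parallel part is divided across cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed workload: 99% of the job parallelizes (illustrative value only).
    for cores in (10, 100, 1000):
        print(f"{cores:>5} cores: {amdahl_speedup(0.99, cores):.1f}x speedup")
```

Under this model, the serial portion of a job caps the achievable speedup, which is one reason real applications rarely scale perfectly with core count.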

Many fields can use HPC technologies, including:

- Engineering – designing and optimizing new physical products, including automobiles, planes, structures, and consumer appliances.

- Scientific Research – basic and applied research, including modeling climate change, producing more accurate weather forecasts, simulating galaxy and star formation, and supporting weapons modernization.

- Finance – making fast trading decisions based on very low-latency calculations.

- Defense – simulating battlefield scenarios and engineering optimized weapons.

- Healthcare – designing new drugs, creating personalized care, and identifying the causes of diseases and their possible contributing factors.

The Supermicro HPC Solutions portfolio consists of HPC servers, HPC storage solutions, HPC networking, and life cycle monitoring software. Together, these technologies form a complete high-performance computing solution that addresses the needs of HPC users.

HPC Solutions

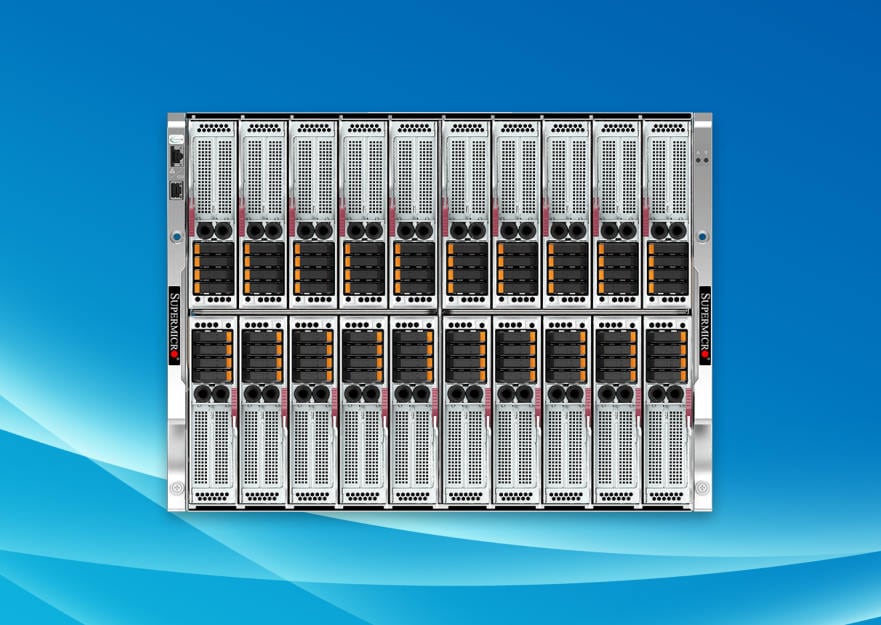

The Supermicro Reference Architecture is designed to fulfill your unique HPC requirements. Our advantages include a wide range of building blocks, from motherboard design and system configuration to fully integrated racks and liquid cooling systems. We focus on providing solutions tailored to each customer's specific needs.

Supermicro Technologies for HPC Environments

Supermicro offers a wide range of servers and storage systems for the most demanding HPC environments.

HPC in Manufacturing (EDA, FEA, CFD)

High-Performance Computing (HPC) is revolutionizing manufacturing by enabling complex simulations, data analysis, and optimization. Manufacturers leverage HPC to design and test products virtually, reducing physical prototypes and accelerating time-to-market. Additionally, HPC optimizes production processes by identifying bottlenecks, predicting equipment failures, and improving energy efficiency. From material science to supply chain management, HPC empowers manufacturers to make data-driven decisions, enhance product quality, and gain a competitive edge in an increasingly complex global market.

HPC in Life Sciences/Medical

High-Performance Computing (HPC) is transforming the life sciences industry by accelerating drug discovery, genomic research, and personalized medicine. Researchers leverage HPC to analyze vast biological datasets, simulate complex molecular interactions, and predict drug efficacy. This technology enables faster development of new treatments, better understanding of diseases, and improved patient outcomes. From identifying potential drug targets to optimizing clinical trials, HPC is essential for driving innovation and advancing human health.

HPC in Research/Government

High-Performance Computing (HPC) is a cornerstone of modern research, accelerating discoveries across disciplines. From simulating the complexities of the human genome to modeling climate change, HPC enables researchers to tackle previously intractable problems. By processing vast amounts of data and performing complex calculations at unprecedented speeds, HPC empowers scientists to uncover new insights, develop innovative solutions, and push the boundaries of human knowledge. Whether exploring the cosmos, developing new materials, or advancing medical treatments, HPC is an indispensable tool for driving scientific progress.

HPC in Financial Services

High-Performance Computing (HPC) is a cornerstone of the financial services industry, enabling complex calculations, risk modeling, and fraud detection at unprecedented speeds. Financial institutions harness HPC to process vast datasets, identify market trends, optimize investment portfolios, and simulate economic scenarios. This technology empowers firms to make faster, more informed decisions, manage risk effectively, and deliver personalized services to clients. From algorithmic trading to regulatory compliance, HPC underpins the core operations of modern financial institutions.

HPC in Energy

High-Performance Computing (HPC) is a catalyst for innovation in the energy sector. It empowers researchers to model complex energy systems, simulate renewable energy sources, and optimize power grids for efficiency and reliability. From exploring new materials for energy storage to predicting energy demand, HPC accelerates the development of clean and sustainable energy solutions. Additionally, HPC plays a crucial role in optimizing oil and gas exploration, refining processes, and carbon capture technologies, contributing to a more resilient and environmentally friendly energy future.

Rack-Scale Integration

Effortless HPC Cluster Deployment with our Datacenter Professional Services

Success Stories

Supermicro can tailor HPC solutions to a wide variety of workloads, including compute-intensive, high-throughput GPU, and high-capacity storage applications used across different industries. Supermicro HPC solutions can be bundled with various open-source platforms and commercial applications with proven success.