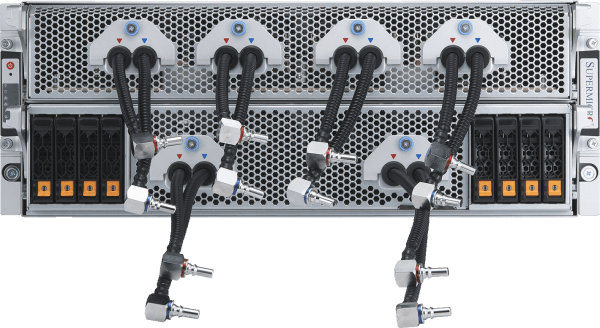

Leading Liquid-Cooled AI Cluster

With 32 NVIDIA HGX H100/H200 8-GPU, 4U Liquid-cooled Systems (256 GPUs) in 5 Racks

- Doubling compute density through Supermicro’s custom liquid-cooling solution, with up to a 40% reduction in data center electricity costs

- 256 NVIDIA H100/H200 GPUs in one scalable unit

- 20TB of HBM3 with H100 or 36TB of HBM3e with H200 in one scalable unit

- 1:1 networking to each GPU to enable NVIDIA GPUDirect RDMA and GPUDirect Storage for training large language models with up to trillions of parameters

- Customizable AI data pipeline storage fabric with industry-leading parallel file system options

- Supports NVIDIA Quantum-2 InfiniBand and Spectrum-X Ethernet platforms

- Certified for the NVIDIA AI Enterprise platform, including NVIDIA NIM microservices
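
The GPU count and aggregate memory figures in the list above follow from simple multiplication. The short Python sketch below reproduces that arithmetic. The per-GPU memory sizes (80 GB of HBM3 for H100, 141 GB of HBM3e for H200) are assumptions based on NVIDIA's published SXM specifications and are not stated on this page; the constant and function names are illustrative only.

```python
# Sizing sketch for one scalable unit.
# Assumption: per-GPU memory comes from NVIDIA's published SXM specs
# (H100: 80 GB HBM3, H200: 141 GB HBM3e), not from this page.
SYSTEMS_PER_UNIT = 32   # 4U liquid-cooled HGX systems per scalable unit
GPUS_PER_SYSTEM = 8     # each HGX H100/H200 system carries 8 GPUs
HBM_GB_PER_GPU = {"H100": 80, "H200": 141}


def gpus_per_unit() -> int:
    """Total GPUs in one scalable unit."""
    return SYSTEMS_PER_UNIT * GPUS_PER_SYSTEM


def hbm_tb_per_unit(gpu: str) -> float:
    """Aggregate GPU memory in decimal terabytes (1 TB = 1000 GB)."""
    return gpus_per_unit() * HBM_GB_PER_GPU[gpu] / 1000


if __name__ == "__main__":
    print(f"GPUs per scalable unit: {gpus_per_unit()}")
    for gpu in HBM_GB_PER_GPU:
        print(f"{gpu}: {hbm_tb_per_unit(gpu):.2f} TB aggregate HBM")
```

Under these assumptions the totals round to the 256 GPUs, 20TB (H100), and 36TB (H200) quoted above.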

Compute Node

Featured Resources