In the era of AI, a unit of compute is no longer measured by the number of servers alone. Today's AI infrastructure is built from GPUs, CPUs, memory, and storage interconnected across multiple nodes and racks. It requires high-speed, low-latency network fabrics, along with carefully designed cooling and power delivery, to sustain optimal performance and efficiency in each data center environment. Supermicro’s SuperCluster provides end-to-end AI data center solutions for rapidly evolving Generative AI and Large Language Models (LLMs).

Complete Integration at Scale

Design and build of full racks and clusters with a global manufacturing capacity of up to 5,000 racks per month

Test, Validate, Deploy with On-site Service

Proven L11 and L12 testing processes thoroughly validate operational effectiveness and efficiency before shipping

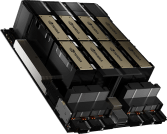

Liquid Cooling/Air Cooling

Fully integrated liquid-cooling or air-cooling solution with GPU and CPU cold plates, Coolant Distribution Units (CDUs), and manifolds

Supply and Inventory Management

One-stop shop that delivers fully integrated racks quickly and on time, reducing time-to-solution for rapid deployment

The full turnkey data center solution accelerates time-to-delivery for mission-critical enterprise use cases and eliminates the complexity of building a large cluster, which was previously achievable only through the intensive design tuning and time-consuming optimization typical of supercomputing.

Liquid-Cooled 2-OU NVIDIA HGX B300 AI Cluster

Fully integrated liquid-cooled 144-node cluster with up to 1152 NVIDIA B300 GPUs

- Unmatched AI training performance density from NVIDIA HGX B300 with compact 2-OU liquid-cooled system nodes

- Supermicro Direct Liquid Cooling featuring 1.8MW capacity in-row CDUs (in-rack CDU options available)

- Large HBM3e GPU memory capacity (288GB* of HBM3e memory per GPU) and system memory footprint for foundation model training

- Scale-out with NVIDIA Quantum-X800 InfiniBand for ultra-low-latency, high-bandwidth AI fabrics

- Dedicated storage fabric options with full NVIDIA GPUDirect RDMA and Storage or RoCE support

- Designed to fully support NVIDIA AI Software Platforms, including NVIDIA AI Enterprise and NVIDIA Run:ai

Compute Node

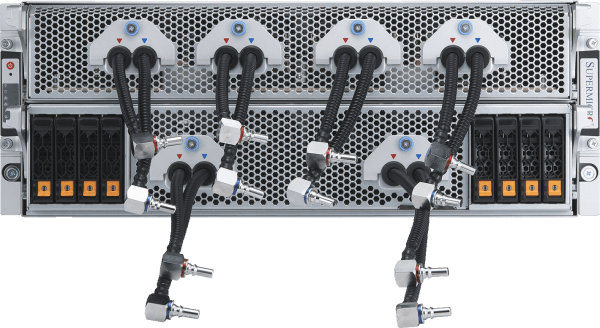
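The headline figures for the HGX B300 clusters can be sanity-checked with a little arithmetic. The sketch below assumes 8 GPUs per node (implied by 1152 GPUs across 144 nodes), treats the quoted 288GB per GPU as a nominal figure, and uses decimal units; the function and constant names are hypothetical and exist only for illustration.

```python
# Back-of-the-envelope sizing for an HGX B300 cluster.
# Assumptions (not stated as such in the text): 8 GPUs per node,
# inferred from 1152 GPUs / 144 nodes, and decimal units (1 TB = 1000 GB).

GPUS_PER_NODE = 8        # assumption: inferred from 1152 / 144
HBM_PER_GPU_GB = 288     # nominal per-GPU HBM3e figure quoted in the text


def cluster_gpus(nodes: int, gpus_per_node: int = GPUS_PER_NODE) -> int:
    """Total GPU count for a cluster with the given number of nodes."""
    return nodes * gpus_per_node


def cluster_hbm_tb(nodes: int, hbm_per_gpu_gb: int = HBM_PER_GPU_GB) -> float:
    """Aggregate nominal HBM across the cluster, in decimal terabytes."""
    return cluster_gpus(nodes) * hbm_per_gpu_gb / 1000


if __name__ == "__main__":
    # 144 nodes is the 2-OU cluster; 72 nodes matches the 4U and 8U clusters.
    for nodes in (144, 72):
        print(f"{nodes} nodes: {cluster_gpus(nodes)} GPUs, "
              f"~{cluster_hbm_tb(nodes):.1f} TB nominal HBM3e")
```

The aggregate memory figure is an upper bound on nominal capacity only; usable capacity per GPU may differ from the quoted value.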

Liquid-Cooled 4U NVIDIA HGX B300 AI Cluster

Fully integrated liquid-cooled 72-node cluster with up to 576 NVIDIA B300 GPUs

- Deploy high-performance AI training and inference with NVIDIA HGX B300 optimized for compute density and serviceability

- Supermicro Direct Liquid Cooling designed for sustained high-power operation and improved energy efficiency

- Large HBM3e GPU memory capacity (288GB* of HBM3e memory per GPU) and system memory footprint for foundation model training

- Scale-out with NVIDIA Spectrum™-X Ethernet or NVIDIA Quantum-X800 InfiniBand

- Dedicated storage fabric options with full NVIDIA GPUDirect RDMA and Storage or RoCE support

- Designed to fully support NVIDIA AI Software Platforms, including NVIDIA AI Enterprise and NVIDIA Run:ai

Compute Node

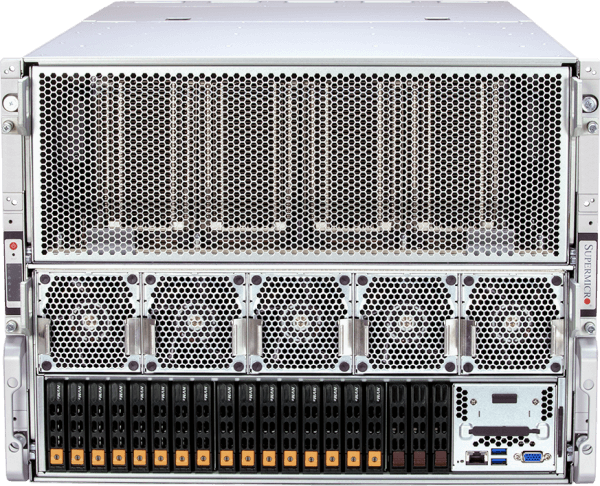

Air-Cooled 8U NVIDIA HGX B300 AI Cluster

Fully integrated air-cooled 72-node cluster with up to 576 NVIDIA B300 GPUs

- Deploy scalable AI training and inference with NVIDIA HGX B300 in an air-cooled design for broader data center compatibility

- Optimized airflow and thermal design enabling high-performance operation without liquid cooling infrastructure

- Large HBM3e GPU memory capacity (288GB* of HBM3e memory per GPU) and system memory footprint for foundation model training

- Scale-out with NVIDIA Spectrum™-X Ethernet or NVIDIA Quantum-X800 InfiniBand

- Dedicated storage fabric options with full NVIDIA GPUDirect RDMA and Storage or RoCE support

- Designed to fully support NVIDIA AI Software Platforms, including NVIDIA AI Enterprise and NVIDIA Run:ai

Compute Node

Liquid-Cooled NVIDIA HGX B200 AI Cluster

With up to 32 NVIDIA HGX B200 8-GPU, 4U Liquid-cooled Systems (256 GPUs) in 5 Racks

- Deploy the pinnacle of AI training and inference performance with 256 NVIDIA B200 GPUs in one scalable unit (5 racks)

- Supermicro Direct Liquid Cooling featuring 250kW capacity in-rack Coolant Distribution Unit (CDU) with redundant PSU and dual hot-swap pumps

- 45 TB of HBM3e memory in one scalable unit

- Scale-out with 400Gb/s NVIDIA Spectrum™-X Ethernet or NVIDIA Quantum-2 InfiniBand

- Dedicated storage fabric options with full NVIDIA GPUDirect RDMA and Storage or RoCE support

- Designed to fully support NVIDIA AI Software Platforms, including NVIDIA AI Enterprise and NVIDIA Run:ai

Compute Node

Air-Cooled NVIDIA HGX B200 AI Cluster

With 32 NVIDIA HGX B200 8-GPU, 10U Air-cooled Systems (256 GPUs) in 9 Racks

- Proven, industry-leading architecture with a new thermally optimized air-cooled system platform

- 45 TB of HBM3e memory in one scalable unit

- Scale-out with 400Gb/s NVIDIA Spectrum-X Ethernet or NVIDIA Quantum-2 InfiniBand

- Dedicated storage fabric options with full NVIDIA GPUDirect RDMA and Storage or RoCE support

- NVIDIA Certified system nodes, fully supporting NVIDIA AI Software Platforms, including NVIDIA AI Enterprise and NVIDIA Run:ai

Compute Node

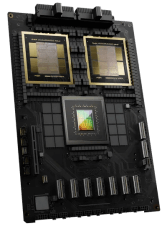
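The 45 TB figure for a 256-GPU HGX B200 scalable unit implies a per-GPU memory capacity, but the text does not say whether "TB" is decimal or binary. The following sketch simply works the division both ways; the helper name `per_gpu_gb` is hypothetical, and neither result should be read as an official per-GPU specification.

```python
# Implied per-GPU HBM3e for one HGX B200 scalable unit.
# Inputs come from the text: 32 systems x 8 GPUs and 45 TB in total.
# Assumption: the unit convention (decimal vs. binary) is unknown,
# so both interpretations are computed.

SYSTEMS = 32
GPUS_PER_SYSTEM = 8
TOTAL_HBM_TB = 45


def per_gpu_gb(total_tb: float, gpus: int, binary: bool) -> float:
    """Divide total memory evenly across GPUs, in GB."""
    gb_per_tb = 1024 if binary else 1000
    return total_tb * gb_per_tb / gpus


if __name__ == "__main__":
    gpus = SYSTEMS * GPUS_PER_SYSTEM
    print(f"GPUs in one scalable unit: {gpus}")
    print(f"Per GPU (decimal TB): {per_gpu_gb(TOTAL_HBM_TB, gpus, False):.1f} GB")
    print(f"Per GPU (binary TB):  {per_gpu_gb(TOTAL_HBM_TB, gpus, True):.1f} GB")
```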

NVIDIA GB200 NVL72

Liquid-cooled Exascale Compute in a Single Rack

- 72x NVIDIA Blackwell B200 GPUs acting as one GPU with a massive pool of HBM3e memory (13.5TB per rack)

- 9x NVLink Switches, with 4 ports per compute tray, connecting 72 GPUs to provide 1.8TB/s GPU-to-GPU interconnect

- Supermicro Direct Liquid Cooling featuring 250kW capacity in-rack Coolant Distribution Unit (CDU) with redundant PSU and dual hot-swap pumps

- Dedicated storage fabric options with full NVIDIA GPUDirect RDMA and Storage or RoCE support

- Scale-out with 400Gb/s NVIDIA Spectrum™-X Ethernet or NVIDIA Quantum-2 InfiniBand

- Designed to fully support NVIDIA AI Software Platforms, including NVIDIA AI Enterprise and NVIDIA Run:ai

Compute Tray

- ARS-121GL-NBO-LCC (not sold individually)
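The rack-level numbers for the GB200 NVL72 can be related to each other with simple arithmetic. This is a rough sketch that assumes the 1.8TB/s figure applies per GPU and that a naive sum across all 72 GPUs is a meaningful aggregate; both are assumptions for illustration, and the function names are hypothetical.

```python
# Rack-level arithmetic for a 72-GPU NVL72 rack.
# Inputs from the text: 72 GPUs, 13.5 TB HBM3e per rack, 1.8 TB/s NVLink.
# Assumptions: 1.8 TB/s is a per-GPU figure, and summing it across GPUs
# gives a rough aggregate; the TB convention (decimal/binary) is unknown.

GPUS_PER_RACK = 72
HBM_PER_RACK_TB = 13.5
NVLINK_PER_GPU_TBPS = 1.8


def hbm_per_gpu_gb(binary: bool = False) -> float:
    """Per-GPU share of the rack's HBM3e pool, in GB."""
    gb_per_tb = 1024 if binary else 1000
    return HBM_PER_RACK_TB * gb_per_tb / GPUS_PER_RACK


def aggregate_nvlink_tbps() -> float:
    """Naive sum of per-GPU NVLink bandwidth across the rack."""
    return GPUS_PER_RACK * NVLINK_PER_GPU_TBPS


if __name__ == "__main__":
    print(f"HBM per GPU (decimal): {hbm_per_gpu_gb():.1f} GB")
    print(f"HBM per GPU (binary):  {hbm_per_gpu_gb(binary=True):.1f} GB")
    print(f"Summed NVLink bandwidth: ~{aggregate_nvlink_tbps():.1f} TB/s")
```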

Leading Liquid-Cooled AI Cluster

With 32 NVIDIA HGX H100/H200 8-GPU, 4U Liquid-cooled Systems (256 GPUs) in 5 Racks

- Doubles compute density through Supermicro’s custom liquid-cooling solution, with up to a 40% reduction in data center electricity cost

- 256 NVIDIA H100/H200 GPUs in one scalable unit

- 20TB of HBM3 with H100 or 36TB of HBM3e with H200 in one scalable unit

- Dedicated storage fabric options with full NVIDIA GPUDirect RDMA and Storage or RoCE support

- Scale-out with 400Gb/s NVIDIA Spectrum™-X Ethernet or NVIDIA Quantum-2 InfiniBand

- NVIDIA Certified system nodes, fully supporting NVIDIA AI Software Platforms, including NVIDIA AI Enterprise and NVIDIA Run:ai

Compute Node
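The quoted memory totals for one scalable unit can be cross-checked against per-GPU capacities. The per-GPU values used below (80 GB for H100, 141 GB for H200) are NVIDIA's published nominal figures and do not appear in the text above, so treat them as assumptions; the names are hypothetical.

```python
# Cross-check of HBM totals for a 256-GPU H100/H200 scalable unit.
# Assumption: nominal per-GPU capacities of 80 GB (H100) and 141 GB (H200);
# these values are not given in the text.

GPUS = 32 * 8  # 32 systems x 8 GPUs, as stated in the text

NOMINAL_HBM_GB = {
    "H100": 80,   # assumption: nominal HBM3 capacity
    "H200": 141,  # assumption: nominal HBM3e capacity
}


def total_hbm_gb(gpu: str, gpus: int = GPUS) -> int:
    """Total nominal HBM in GB for the given GPU model."""
    return NOMINAL_HBM_GB[gpu] * gpus


if __name__ == "__main__":
    for model in NOMINAL_HBM_GB:
        gb = total_hbm_gb(model)
        print(f"{model}: {gb} GB = {gb / 1000:.2f} TB (decimal) "
              f"= {gb / 1024:.2f} TB (binary)")
```

Under these assumptions, the H100 total lines up with the quoted 20TB when read in binary units and the H200 total lines up with 36TB when read in decimal units, which suggests the quoted figures are rounded.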

Proven Design

With 32 NVIDIA HGX H100/H200 8-GPU, 8U Air-cooled Systems (256 GPUs) in 9 Racks

- Proven, industry-leading architecture for large-scale AI infrastructure deployments

- 256 NVIDIA H100/H200 GPUs in one scalable unit

- 20TB of HBM3 with H100 or 36TB of HBM3e with H200 in one scalable unit

- Scale-out with 400Gb/s NVIDIA Spectrum™-X Ethernet or NVIDIA Quantum-2 InfiniBand

- Customizable AI data pipeline storage fabric with industry-leading parallel file system options

- NVIDIA Certified system nodes, fully supporting NVIDIA AI Software Platforms, including NVIDIA AI Enterprise and NVIDIA Run:ai

Compute Node
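The liquid-cooled and air-cooled H100/H200 scalable units hold the same 256 GPUs in different footprints, so their density can be compared directly. The sketch below uses only the system counts, heights, and rack counts stated above; the `ScalableUnit` class is hypothetical, and the two ratios are just two possible ways to read a "compute density" comparison, not the vendor's own methodology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalableUnit:
    """Hypothetical container for the footprint figures quoted in the text."""
    name: str
    systems: int
    gpus_per_system: int
    system_height_u: int
    racks: int

    @property
    def gpus(self) -> int:
        return self.systems * self.gpus_per_system

    @property
    def gpus_per_rack(self) -> float:
        # Average over all racks in the unit, whatever each rack contains.
        return self.gpus / self.racks

    @property
    def gpus_per_u(self) -> float:
        # Density per rack unit of system height.
        return self.gpus_per_system / self.system_height_u


liquid = ScalableUnit("4U liquid-cooled", 32, 8, 4, 5)
air = ScalableUnit("8U air-cooled", 32, 8, 8, 9)

if __name__ == "__main__":
    for unit in (liquid, air):
        print(f"{unit.name}: {unit.gpus} GPUs, "
              f"{unit.gpus_per_rack:.1f} GPUs/rack, "
              f"{unit.gpus_per_u:.1f} GPUs per U")
    print(f"Per-U ratio:    {liquid.gpus_per_u / air.gpus_per_u:.1f}x")
    print(f"Per-rack ratio: {liquid.gpus_per_rack / air.gpus_per_rack:.1f}x")
```

Measured per rack unit of system height, the liquid-cooled system is twice as dense; measured per rack across the whole scalable unit, the ratio is somewhat lower.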